| Author: | gone369 |
|---|---|
| Last Update: | April 22, 2025 |
| License: | MIT |

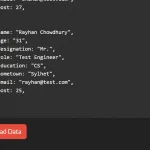

Preview:

Description:

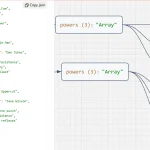

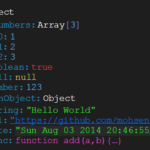

json-spread is a JavaScript library that takes a nested JSON structure, flattens it, and then duplicates objects based on the elements within any nested arrays.

Its main purpose is to convert hierarchical JSON data, the kind you often get from APIs, into a flat, row-column format suitable for CSVs, TSVs, spreadsheets, or data tables.

How it works:

First, it flattens the JSON, converting keys like { "a": { "b": 1 } } into "a.b": 1 (using a configurable delimiter). Then, the key part: if it encounters an array during this process, it creates separate flattened objects for each item in that array, merging the rest of the parent object’s flattened keys into each new object.
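The two steps described above can be sketched in a few lines. This is an illustrative approximation, not json-spread's actual source: the function name `spreadSketch` is hypothetical, and the way it combines sibling arrays (as a cross product) and represents empty arrays (as `null`) are assumptions of the sketch rather than confirmed library behavior.

```javascript
// Illustrative sketch only; not the library's implementation.
function spreadSketch(value, prefix = "", delimiter = ".") {
  // Arrays: every element produces its own row(s) under the same key path.
  if (Array.isArray(value)) {
    if (value.length === 0) return prefix ? [{ [prefix]: null }] : [];
    return value.flatMap((item) => spreadSketch(item, prefix, delimiter));
  }
  // Plain objects: process each property, then merge the partial rows.
  if (value !== null && typeof value === "object") {
    let rows = [{}];
    for (const [key, child] of Object.entries(value)) {
      const path = prefix ? prefix + delimiter + key : key;
      const childRows = spreadSketch(child, path, delimiter);
      // Copy the keys collected so far into every row the child produced.
      rows = rows.flatMap((row) => childRows.map((c) => ({ ...row, ...c })));
    }
    return rows;
  }
  // Primitives: a single row holding one flattened key.
  return [{ [prefix]: value }];
}

const user = {
  id: 7,
  profile: { name: "Ada" },
  orders: [{ sku: "A1" }, { sku: "B2" }],
};
const rows = spreadSketch(user);
// rows[0] → { id: 7, "profile.name": "Ada", "orders.sku": "A1" }
// rows[1] → { id: 7, "profile.name": "Ada", "orders.sku": "B2" }
```

The parent fields (`id`, `profile.name`) are repeated in each row, one row per element of the `orders` array.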

```js
// Convert
{
  "a": {
    "b": 1
  }
}

// Into...
{
  "a.b": 1
}
```

Use Cases:

- Exporting API Data to CSV/Excel: This is the prime use case. APIs often return nested data (e.g., a user with a list of orders, each order having a list of items). json-spread transforms this directly into rows where each row represents a single item, with user data duplicated as needed. Much cleaner than manual looping and mapping.
- Populating Data Grids/Tables: Front-end table components usually prefer a flat array of objects. If your data source is nested, json-spread can act as the intermediary, simplifying the data structure before passing it to the UI component.
- Data Normalization for Reporting: Internal tools or reporting dashboards often need data in a consistent, flat format. If you’re pulling data from various sources with inconsistent nesting, json-spread can help normalize it into a predictable structure before analysis or display.
- Preparing Data for Simple Databases/Storage: Sometimes you need to store denormalized data derived from a complex object. json-spread can generate the individual records you might insert into a simpler table structure.
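For the CSV export use case, the flat rows still have to be serialized. A minimal sketch of that last step follows. The helper `toCsv` is hypothetical and not part of json-spread, and the sample rows are hand-written to resemble flattened output rather than produced by the library.

```javascript
// Hypothetical helper: turn an array of flat row objects into CSV text.
function toCsv(rows) {
  // Collect every column name in first-seen order; rows may differ in keys.
  const columns = [...new Set(rows.flatMap((row) => Object.keys(row)))];
  const escapeCell = (value) => {
    if (value === null || value === undefined) return "";
    const text = String(value);
    // Quote cells containing commas, quotes, or line breaks; double inner quotes.
    return /[",\r\n]/.test(text) ? '"' + text.replace(/"/g, '""') + '"' : text;
  };
  const lines = [columns, ...rows.map((row) => columns.map((c) => row[c]))];
  return lines.map((cells) => cells.map(escapeCell).join(",")).join("\r\n");
}

// Sample rows shaped like flattened output (written by hand for this sketch).
const rows = [
  { "user.name": "Ada", "orders.id": 1, "orders.items.sku": "A1" },
  { "user.name": "Ada", "orders.id": 1, "orders.items.sku": "B2, large" },
];
const csv = toCsv(rows);
// csv has a header line followed by one line per row;
// the cell "B2, large" is wrapped in double quotes because it contains a comma.
```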

How to use it:

1. Install json-spread and import it into your project.

```shell
# Yarn
$ yarn add json-spread

# NPM
$ npm install json-spread
```

```js
// CommonJS
const jsonSpread = require('json-spread');

// ES Module
// import jsonSpread from 'json-spread';
```

2. For browsers, grab the browser build from the dist folder in the package (jsonSpread.umd.js in the snippet below) and include it via a <script> tag. It exposes a global jsonSpread function.

```html
<script src="/dist/jsonSpread.umd.js"></script>
```

3. Call jsonSpread() with your JSON object, or an array of objects, as the first argument.

```js
const output = jsonSpread(myData);
console.log(output);
```

4. jsonSpread accepts an options object as a second parameter:

```js
const output = jsonSpread(myData, {
  // Custom delimiter (default is '.')
  delimiter: "-",
  // Remove empty arrays (default is false)
  removeEmptyArray: true,
  // Value for empty arrays (default is null)
  emptyValue: "N/A"
});
```

FAQs:

Q: What happens if two flattened properties end up with the same name?

A: If the flattening process produces identical property paths after delimiter concatenation, the later property overwrites the earlier one. This can happen if you have array indices or properties that contain the delimiter character. Consider using a custom delimiter that doesn’t appear in your property names.
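To see how such a collision can arise, here is a small stand-alone flattener. It is an illustrative sketch, not json-spread's code; `flattenSketch` is a hypothetical name, and the overwrite order it shows is a property of this sketch.

```javascript
// Illustrative flattener (not the library's code) showing a key collision.
function flattenSketch(obj, delimiter = ".", prefix = "", out = {}) {
  for (const [key, value] of Object.entries(obj)) {
    const path = prefix ? prefix + delimiter + key : key;
    if (value !== null && typeof value === "object" && !Array.isArray(value)) {
      flattenSketch(value, delimiter, path, out);
    } else {
      out[path] = value; // a later write to the same path replaces the earlier one
    }
  }
  return out;
}

// The key "a.b" already contains the default delimiter.
const input = { "a.b": 1, a: { b: 2 } };
const collided = flattenSketch(input, "."); // both properties map to "a.b"
const distinct = flattenSketch(input, "/"); // "a.b" and "a/b" stay separate
```

With the "." delimiter only one of the two values survives; with a delimiter that does not occur in any property name, both are kept.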

Q: Is there a maximum depth limit for nested objects?

A: json-spread can handle any level of nesting that JavaScript itself supports. However, extremely deep nesting (hundreds of levels) may cause performance issues or hit JavaScript’s call stack limit, because the processing is recursive.

Q: Does json-spread modify the original object?

A: No, json-spread operates non-destructively and returns a new array of objects without modifying the input data.

Q: How do I handle large datasets that might cause memory issues?

A: For very large datasets, consider processing in batches or implementing a streaming approach by breaking your input into manageable chunks and processing them sequentially.
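A rough sketch of the batching idea is shown here. The names `inBatches` and `processInBatches` are hypothetical, `transform` stands in for the flattening call (for example jsonSpread), and `handleRows` stands in for whatever consumes the output, such as a file writer.

```javascript
// Yield successive slices of `items`, each at most `size` long.
function* inBatches(items, size) {
  for (let start = 0; start < items.length; start += size) {
    yield items.slice(start, start + size);
  }
}

// `transform` is a stand-in for the flattening call (e.g. jsonSpread);
// `handleRows` is a hypothetical sink that consumes each batch of rows.
function processInBatches(records, size, transform, handleRows) {
  for (const batch of inBatches(records, size)) {
    handleRows(transform(batch)); // only one batch of output is held at a time
  }
}

// Usage with stand-in functions so the sketch runs on its own.
const records = [{ id: 1 }, { id: 2 }, { id: 3 }, { id: 4 }, { id: 5 }];
const batchSizes = [];
processInBatches(records, 2, (batch) => batch, (rows) => batchSizes.push(rows.length));
// batchSizes → [2, 2, 1]
```

Batching bounds the size of the flattened output held in memory at once; the input array itself still needs to fit in memory unless it is read incrementally.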